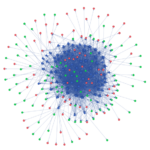

We already have a graphic representation of the inter-connectedness of the Niade posts and pages: a website connectivity graph. As you might expect, because the site is very highly interconnected, that winds up looking like a snarled mess. Much more useful is a knowledge graph: a visual representation of the conceptual (semantic) relatedness between the posts and pages.

Niade knowledge graph

Here is an interactive knowledge graph for Niade. Each ‘node’ is a post/page (blue), a tag (green) or a category (orange). You can hover/click/drag to see the relatedness between each of these. The knowledge graph immediately shows you what type of content is most highly represented and how that relates to other content. I have been calling Niade “the fish blog”, but the knowledge graph shows it’s much more about Plants and the Shrimphaus and actually not so much about fish at all. There is a little fishy bit, though: type ‘fish’ into the search bar (no disrespect to fish).

Interactive knowledge graph — tap a node to select; long-press to open the page.

The knowledge graph is searchable, which I find is a pretty useful complement to the sitemap.
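To make the structure concrete, here is a minimal sketch of a graph with the three node kinds described above and a label search. It is not the code behind the interactive widget: the `KnowledgeGraph` class, its method names, and the demo labels and links are all hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass, field

# Node colours as described in the post.
COLOURS = {"post": "blue", "tag": "green", "category": "orange"}


@dataclass
class KnowledgeGraph:
    kinds: dict = field(default_factory=dict)  # label -> "post" | "tag" | "category"
    edges: dict = field(default_factory=dict)  # label -> set of linked labels

    def add_node(self, label, kind):
        if kind not in COLOURS:
            raise ValueError(f"unknown node kind: {kind}")
        self.kinds[label] = kind
        self.edges.setdefault(label, set())

    def link(self, a, b):
        """Add an undirected edge between two existing nodes."""
        self.edges[a].add(b)
        self.edges[b].add(a)

    def search(self, query):
        """Case-insensitive substring search over node labels."""
        q = query.lower()
        return {
            label: sorted(self.edges[label])
            for label in self.kinds
            if q in label.lower()
        }


# Hypothetical demo data: these labels and links are made up for illustration.
graph = KnowledgeGraph()
graph.add_node("Bacopa caroliniana", "post")
graph.add_node("Fireplace Aquarium", "post")
graph.add_node("Plants", "category")
graph.add_node("Epiphytes", "tag")
graph.link("Bacopa caroliniana", "Plants")
graph.link("Bacopa caroliniana", "Epiphytes")
graph.link("Fireplace Aquarium", "Epiphytes")

hits = graph.search("bacopa")  # matching labels mapped to their neighbours
```

Searching returns each matching node together with its neighbours, which is roughly what typing into the search bar and then clicking a node gives you visually.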

Is Bacopa caroliniana an epiphyte?

Bacopa (cyan) vs. Anubias (purple)

Well no, obviously not. That said, the knowledge graph was bound and determined to use the same tag for bacopa as for the epiphytes growing on the mountain in the Fireplace Aquarium. ARGH! Software bug?

I did a vigorous investigation and no, it’s not a software bug. I even added a paragraph to the bacopa page emphasising the stem-plant-ness of the bacopa to see if that would make the knowledge graph shift it over to something closer to ludwigia. That did shift the maths a little bit, but not enough. WTF?

But look at this… The bacopa visually makes a big green vertical column on the right of the Fireplace Aquarium. The epiphytes, mostly Anubias barteri ‘Mini Coin’ growing on the mountain sculpture, make a big green vertical column in the middle-right of the Fireplace Aquarium. The knowledge graph has no inherent training in plant taxonomy, but maybe it is picking up on the visual relatedness of these plants (presumably via how the pages describe them), much as it might pick up on the visual layers of the aquarium.

Interesting…

Knowledge graph technical implementation

There are various ways to show inter-related content on WordPress, and the popular ‘related pages/posts’ plug-ins generally work by text-matching. But how about a 2026 AI-driven solution using transformer-based analysis?
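For contrast, here is text-matching in about its simplest form: scoring two snippets by the share of words they have in common (Jaccard overlap). This is an illustrative sketch only, not how any particular plug-in works, and the function names `words` and `jaccard` are made up for the example.

```python
import re


def words(text):
    """Lower-case a snippet and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def jaccard(a, b):
    """Shared words divided by all distinct words across both snippets."""
    wa, wb = words(a), words(b)
    union = wa | wb
    return len(wa & wb) / len(union) if union else 0.0


overlapping = jaccard("shrimp tank plants", "plants for the shrimp tank")
disjoint = jaccard("aquarium greenery", "shrimp tank plants")
```

The second pair is about much the same thing but shares no words, so plain word overlap scores it as unrelated. That gap is what a semantic approach is meant to close.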

I engineered a dual transformer * semantic tagging system. First the pages and posts from Niade.com were scraped and passed to an implementation of the SBERT (Sentence-BERT) bi-directional transformer. SBERT converted each entry into a 384-dimensional vector representation (embedding) of the overall semantic meaning of the content. These vectors were then grouped into clusters of highly related content using “hierarchical divisive clustering with silhouette‑based split validation” (I don’t really understand what that means, but it proved to be a robustly high-performing option).

The grouped pages, along with relevant extracted phrases that best matched the overall meaning of each group, were sent to the autoregressive large language model (LLM) Claude 3.5, accessed through OpenRouter, which was tasked with coming up with “the best short name that describes this collected content”. Those names were turned into tags and assigned to most of Niade’s pages and posts. You can see them at the bottom of this post where it says ‘Tagged’, and you can click on the tags on the knowledge graph.
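For the curious, the clustering phrase unpacks roughly like this: start with everything in one group, try splitting it in two, and keep the split only if a silhouette score (a measure of how cleanly separated the two halves are, from -1 to 1) is good enough; then repeat on each half. The sketch below is one plausible reading of that idea, not the actual Niade script. It assumes a simple 2-means bisection, a threshold of 0.5, a minimum group size of 2, and toy 2-D vectors standing in for the 384-dimensional embeddings; all names and values are hypothetical.

```python
import math


def mean(vectors):
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def two_means(vectors, rounds=10):
    """Bisect with 2-means, seeded with the two vectors that are farthest apart."""
    _, i, j = max((math.dist(a, b), i, j)
                  for i, a in enumerate(vectors)
                  for j, b in enumerate(vectors) if i < j)
    centres = [vectors[i], vectors[j]]
    labels = [0] * len(vectors)
    for _ in range(rounds):
        labels = [0 if math.dist(v, centres[0]) <= math.dist(v, centres[1]) else 1
                  for v in vectors]
        for k in (0, 1):
            members = [v for v, lab in zip(vectors, labels) if lab == k]
            if members:
                centres[k] = mean(members)
    return labels


def silhouette(vectors, labels):
    """Mean silhouette score of a two-way split."""
    scores = []
    for i, v in enumerate(vectors):
        same = [math.dist(v, w) for j, w in enumerate(vectors)
                if j != i and labels[j] == labels[i]]
        other = [math.dist(v, w) for j, w in enumerate(vectors)
                 if labels[j] != labels[i]]
        if not same or not other:
            scores.append(0.0)  # convention: a lone point scores 0
            continue
        a, b = sum(same) / len(same), sum(other) / len(other)
        scores.append((b - a) / (max(a, b) or 1.0))
    return sum(scores) / len(scores)


def divisive_clusters(vectors, ids, min_score=0.5, min_size=2):
    """Recursively bisect; a split is kept only if it passes validation."""
    if len(ids) < 2 * min_size:
        return [ids]
    labels = two_means(vectors)
    halves = [[k for k, lab in enumerate(labels) if lab == side] for side in (0, 1)]
    too_small = min(len(h) for h in halves) < min_size
    if too_small or silhouette(vectors, labels) < min_score:
        return [ids]  # split rejected: this group stays whole
    result = []
    for half in halves:
        result += divisive_clusters([vectors[k] for k in half],
                                    [ids[k] for k in half], min_score, min_size)
    return result


# Toy data (hypothetical): three tight squares of four points each.
ids = [f"{group}-{n}" for group in ("plants", "shrimp", "fish") for n in range(4)]
vectors = [(x + dx, dy) for x in (0, 10, 30) for dx in (0, 1) for dy in (0, 1)]
clusters = divisive_clusters(vectors, ids)
```

On this toy data the sketch ends up with the three groups of four: the first split peels off the far-away group, the second separates the remaining two, and further splits inside each tight square are rejected. In a real pipeline you would more likely reach for a library implementation of the silhouette score than hand-roll it.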

Coding the actual Python scripts was done primarily by ChatGPT (with some help from Claude and Gemini). I found Claude 4.5 to be personable and friendly, maybe too much in ‘eager puppy mode’, but at the end of the day I considered ChatGPT 5 to be the better programmer.

* I wrote an introduction to transformer architecture on LinkedIn, though it is maybe not as friendly as it could be.

Dual transformer processing

It turns out this dual transformer approach closely mirrors the original use case that drove the development of transformers: human language translation. As described in the seminal paper “Attention Is All You Need”, a bi-directional (everything attends to everything) transformer, the encoder, converts written language into a mathematical representation of the underlying concepts, and then an autoregressive (each token attends only to itself and what came before) transformer, the decoder, converts that conceptual representation back into (a different) human language.
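The difference between the two attention patterns is easy to see as a mask, where row i shows which positions token i is allowed to look at. This is a minimal illustration with made-up helper names (`attention_mask`, `show`), not code from any transformer library.

```python
def attention_mask(n_tokens, causal):
    """mask[i][j] is True when token i may attend to token j."""
    return [[(j <= i) if causal else True for j in range(n_tokens)]
            for i in range(n_tokens)]


def show(mask):
    """Render a mask as text: 'x' = may attend, '.' = blocked."""
    return "\n".join("".join("x" if ok else "." for ok in row) for row in mask)


bidirectional = attention_mask(4, causal=False)   # encoder-style: full grid
autoregressive = attention_mask(4, causal=True)   # decoder-style: lower triangle

print(show(bidirectional))
print()
print(show(autoregressive))
```

The bi-directional mask is a full grid, so every token sees the whole text at once, which suits summarising meaning into an embedding. The autoregressive mask is a lower triangle, so each token sees only itself and what came before, which suits generating text one token at a time.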